Common rules

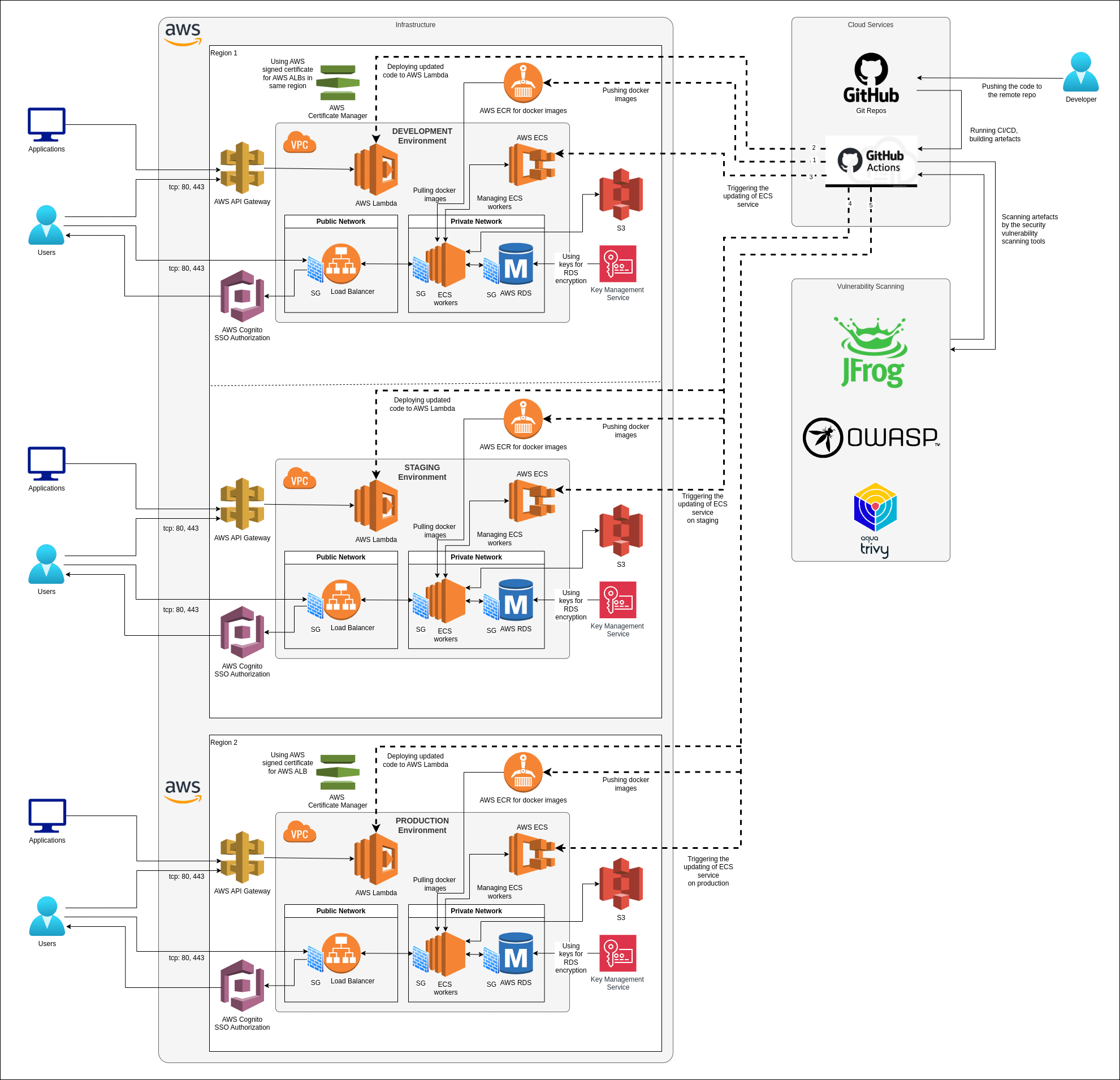

At Onix, DevOps is responsible for the infrastructure of web projects.

In most cases, a DevOps engineer is part of the development team. In this role, their main tasks are:

- Deploy the web application to production

- Set up and configure the CI/CD environment for development

At the start of a new project, the client may set tasks of the following types:

- Create new infrastructure for a new project

- Improve the infrastructure of an existing project

- Migrate infrastructure from one cloud provider or hosting type to another (AWS, Azure, GCloud, DigitalOcean, AliCloud, dedicated server, VPS)

At the start of the work, we determine the client’s priorities. These may include:

- Cost.

- Handling high load.

- Automation of all processes.

- Setting up continuous integration and continuous delivery (CI/CD).

Once the priorities and tasks are identified, we plan the work according to the client’s timeline. The plan typically covers the following steps:

- Access to cloud services. We need access to the client’s accounts. For a cloud provider, the client registers the account and then invites team members with the permissions they need for their work. This way, everything runs in the client’s account, and the client controls the costs and can discuss them with the team promptly.

- Access to the repositories. We need access to the repositories that contain the application code. A separate repository is created for the DevOps engineer’s automation scripts.

- How to run. Next, the DevOps engineer studies the application to determine which resources and dependencies it needs to run.

- Run locally. The application is then run locally in Docker to understand how it works.

- Environment for developers. We then create a Vagrantfile that gives all developers an identical local environment. This environment is kept as close to production as possible so that the application behaves the same everywhere.

- Staging server. A server for the software engineers and the QA team. It mirrors the production server so that all changes can be tested before they are released.

- Development pipeline. Finally, we set up the CI/CD pipeline for development.
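Keeping the developer environment and the staging server equal to production can also be checked automatically. Below is a minimal Python sketch, assuming each environment’s component versions have already been collected into a dictionary (how they are gathered is out of scope); `find_drift` is a hypothetical helper, not part of Vagrant or any CI tool, and the versions shown are illustrative only.

```python
from typing import Dict, List, Tuple

# Hypothetical inventory format: component name -> version string.
Inventory = Dict[str, str]


def find_drift(reference: Inventory, other: Inventory) -> List[Tuple[str, str, str]]:
    """Return (component, reference_version, other_version) for every mismatch.

    A component missing from one side is reported as "<missing>".
    """
    drift = []
    for name in sorted(set(reference) | set(other)):
        ref_version = reference.get(name, "<missing>")
        other_version = other.get(name, "<missing>")
        if ref_version != other_version:
            drift.append((name, ref_version, other_version))
    return drift


if __name__ == "__main__":
    # Illustrative data: production is the reference environment.
    production = {"php": "8.2", "mysql": "8.0", "nginx": "1.24"}
    staging = {"php": "8.2", "mysql": "8.0", "nginx": "1.24"}
    local_vagrant = {"php": "8.1", "mysql": "8.0"}

    for env_name, inventory in [("staging", staging), ("local", local_vagrant)]:
        for name, expected, actual in find_drift(production, inventory):
            print(f"{env_name}: {name} is {actual}, production has {expected}")
```

Running such a check in the pipeline would flag drift before it causes environment-specific bugs; the reference environment is production because the other environments are meant to follow it.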

Changes to an existing project

On an existing project, we start by collecting information:

- Access to servers, services, and repositories.

- Review the documentation and README.md files in the repositories.

- Run everything locally following the instructions and see how it works.

- Examine all environment configurations, access rights, servers, and databases.

Next, we prepare a plan of changes and optimizations: how to upgrade the infrastructure, fix problems without downtime, and keep developing the project.

Managing access to different systems

A project has several types of secrets.

We follow the principle of least privilege: everyone on the project gets only the minimum access they need to do their work.

All access is issued individually, and every team member is responsible for their own access to resources.

All access credentials are stored in a separate folder in Google Docs that is shared with the client.
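As an illustration of least privilege on AWS, here is a minimal Python sketch that builds an IAM policy document granting read-only access to a single S3 bucket and nothing else. The helper name and the bucket name are hypothetical; the policy layout (`Version`, `Statement`, `Effect`, `Action`, `Resource`) follows the standard IAM JSON policy format.

```python
import json


def read_only_bucket_policy(bucket: str) -> dict:
    """Build an IAM policy that only allows listing and reading one S3 bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Reading is granted on the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


if __name__ == "__main__":
    # "example-project-uploads" is a hypothetical bucket name.
    print(json.dumps(read_only_bucket_policy("example-project-uploads"), indent=2))
```

Because IAM denies anything not explicitly allowed, a user or role with only this policy cannot write or delete objects, which is the "minimum access" idea applied to one resource.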

Secrets for the project

Each environment has its own set of secret variables, for example database credentials, SMS gateway keys, S3 bucket access, mailing list credentials, third-party API keys, and more.

In the development environment, all of this data is available to developers so they can work quickly.

In production, most of these secrets are not available to developers. Some are generated automatically and stored only inside the environment; others are available only to the support team.
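One common way to keep such secrets out of the code is to have the application read them from environment variables supplied by each environment. A minimal Python sketch, assuming hypothetical variable names (`DATABASE_URL`, `SMS_GATEWAY_API_KEY`, `S3_BUCKET`); the application fails fast at startup if any of them is missing.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not set in the environment."""


@dataclass(frozen=True)
class Secrets:
    database_url: str
    sms_gateway_api_key: str
    s3_bucket: str


# Hypothetical variable names; each environment defines its own values.
REQUIRED = {
    "database_url": "DATABASE_URL",
    "sms_gateway_api_key": "SMS_GATEWAY_API_KEY",
    "s3_bucket": "S3_BUCKET",
}


def load_secrets(env: Optional[Mapping[str, str]] = None) -> Secrets:
    """Read all required secrets, failing fast if any are missing or empty."""
    source = os.environ if env is None else env
    missing = [var for var in REQUIRED.values() if not source.get(var)]
    if missing:
        # Report only the variable names, never the values, so logs do not leak secrets.
        raise MissingSecretError("missing secrets: " + ", ".join(sorted(missing)))
    return Secrets(**{field: source[var] for field, var in REQUIRED.items()})
```

The code is identical in every environment; only the values differ, so production values can be generated and injected by the environment without developers ever seeing them.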

Monitoring

To get real-time information about how a project is running, we use various monitoring systems. In simple cases, we can use an AWS service such as CloudWatch.

When we need more detail and custom configuration, we use a Zabbix server with custom notification rules, so that the responsible people on the project are notified according to those rules, for example on CPU load, server or database load, traffic volume, and other metrics.
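The idea of rule-based notifications can be sketched independently of any particular tool. Below is a minimal Python sketch, not Zabbix’s configuration format or API: each hypothetical rule names a metric, a threshold, and the people to notify, and the evaluator returns who should be alerted for a given set of readings. Metric names, thresholds, and addresses are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Rule:
    metric: str                  # hypothetical metric name, e.g. "cpu_load_percent"
    threshold: float             # alert when the reading is strictly above this value
    recipients: Tuple[str, ...]  # people responsible for this metric


def evaluate(rules: List[Rule], readings: Dict[str, float]) -> Dict[str, List[str]]:
    """Map each recipient to the alert messages they should receive."""
    alerts: Dict[str, List[str]] = {}
    for rule in rules:
        value = readings.get(rule.metric)
        if value is None or value <= rule.threshold:
            continue  # metric not reported, or within limits
        message = f"{rule.metric}={value} exceeds {rule.threshold}"
        for person in rule.recipients:
            alerts.setdefault(person, []).append(message)
    return alerts


if __name__ == "__main__":
    rules = [
        Rule("cpu_load_percent", 90.0, ("devops@example.com",)),
        Rule("db_connections", 500.0, ("devops@example.com", "backend-lead@example.com")),
        Rule("traffic_mbps", 800.0, ("devops@example.com",)),
    ]
    readings = {"cpu_load_percent": 95.5, "db_connections": 120.0, "traffic_mbps": 850.0}
    for person, messages in evaluate(rules, readings).items():
        print(person, messages)
```

Routing per rule, rather than sending every alert to everyone, is what lets each person receive only the notifications they are responsible for.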

The option that currently suits most of our clients is New Relic (https://newrelic.com/). New Relic is a California-based technology company that develops cloud-based software to help website and application owners track the performance of their services. It offers a free plan for small companies (https://newrelic.com/pricing).

Infrastructure as code

Our DevOps team follows the Infrastructure as Code approach: all our processes must be repeatable. To manage infrastructure, we write code (Ansible, Terraform) and store it in a repository. This lets us run the same scripts again and again and reduces manual operations.
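Repeatability in tools like Terraform rests on a declarative model: the code describes the desired state, the tool compares it with the current state, and only the difference is applied, so running the same code a second time changes nothing. A toy Python sketch of that plan/apply idea, assuming resources are plain dictionaries with hypothetical names; it illustrates the concept only and is not how Terraform or Ansible are implemented.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: resource name -> its settings.
State = Dict[str, Dict[str, str]]
Action = Tuple[str, str]  # (operation, resource name)


def plan(desired: State, current: State) -> List[Action]:
    """Compute the actions needed to move `current` to `desired`."""
    actions: List[Action] = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != desired[name]:
            actions.append(("update", name))
    for name in sorted(set(current) - set(desired)):
        actions.append(("delete", name))
    return actions


def apply(desired: State, current: State) -> State:
    """Apply the plan to a copy of `current` and return the resulting state."""
    new_state = {name: dict(settings) for name, settings in current.items()}
    for operation, name in plan(desired, current):
        if operation == "delete":
            del new_state[name]
        else:  # "create" or "update"
            new_state[name] = dict(desired[name])
    return new_state


if __name__ == "__main__":
    desired = {"web-server": {"size": "medium"}, "db": {"engine": "postgres"}}
    current = {"web-server": {"size": "small"}, "old-cache": {"engine": "redis"}}
    print(plan(desired, current))  # first run: create, update, delete
    state = apply(desired, current)
    print(plan(desired, state))  # second run: nothing left to do
```

The second `plan` coming back empty is the property that makes it safe to rerun the same infrastructure code.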

Technology Stack

- System Administration

- Cloud Providers
  - Amazon Web Services
  - MS Azure
  - Google Cloud
  - DigitalOcean
  - Heroku
  - VPS
  - Dedicated Servers

- Virtualization
  - Docker
  - VMware
  - Vagrant

- Package Management
  - Composer
  - npm
  - Yarn
  - Brew

- Orchestration systems
  - Kubernetes
  - Ansible
  - Chef
  - Puppet
  - Terraform
  - Bash scripting
  - Docker Swarm

- Web Servers
  - Apache
  - Nginx

- Monitoring Systems
  - Icinga
  - Graphite
  - Grafana

- Tools
  - Tomcat
  - Maven
  - Jenkins
  - Zabbix
  - HashiCorp Vault
  - TeamCity
  - Travis CI
  - GitLab CI
  - Bitbucket CI
  - CircleCI

- Server and container OS
  - Ubuntu
  - CentOS
  - Red Hat