Challenge:

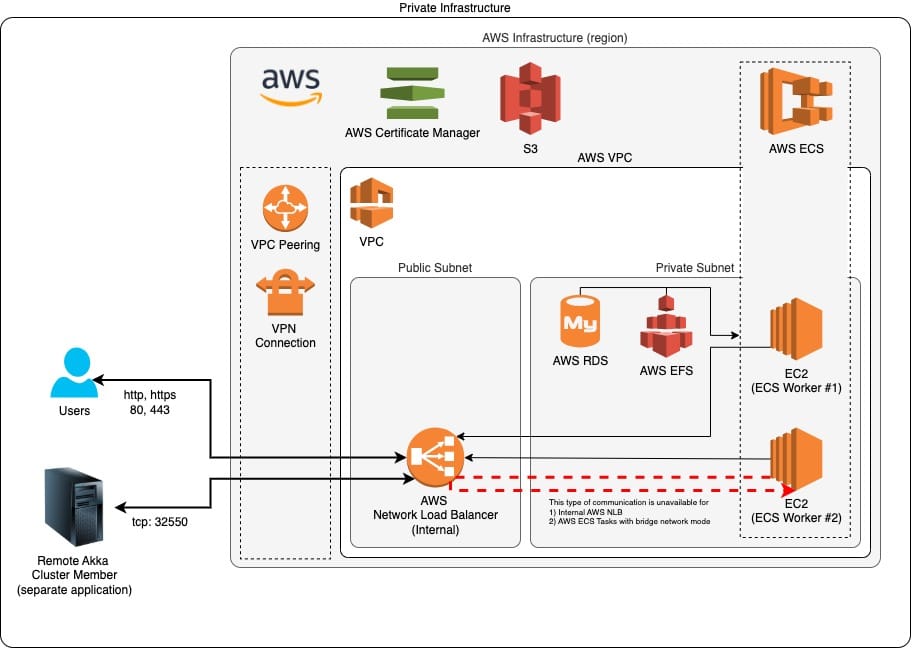

We deployed an AWS ECS cluster using Terraform, with multiple application components communicating through an AWS Network Load Balancer (NLB). We chose the NLB because the application used a custom protocol (Akka Cluster Discovery Protocol over TCP), which the AWS Application Load Balancer (ALB) does not support.

Initially, everything worked with an internet-facing NLB, but the client then asked us to restrict access to the application to their internal network only. This required changing the NLB scheme to internal. After the change, external access was blocked as expected, but some application components within ECS lost connectivity to each other.

Investigation and Root Cause:

- Issue: Components running on the same ECS worker (the same EC2 container instance) could not communicate via the internal NLB.

- Observation: Components running on different ECS workers could still communicate.

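- Root Cause: An internal NLB with targets registered by instance ID does not support loopback (hairpin) traffic. Because the NLB preserves the client IP for such targets, a request routed back to the worker it came from arrives with the same source and destination host and is dropped, so a container on a worker cannot reach another container on the same worker via the NLB.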

- Confirmation: The issue was verified through deep analysis and discussions with AWS Support.

Solution:

- Switched the ECS task network mode from the default bridge mode to awsvpc.

- Ensured each task received its own elastic network interface and private IP address, and registered it with the NLB target group by that IP (target type ip) rather than by instance ID.

- This allowed all components to communicate consistently via the internal NLB, regardless of whether they were on the same or different ECS workers.
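
The changes above can be sketched in Terraform roughly as follows. This is a minimal illustration rather than the project's actual configuration: every resource name, variable, and the port 2552 (a commonly used Akka remoting port) is an assumption made for the example. The two settings that matter are `network_mode = "awsvpc"` on the task definition and `target_type = "ip"` on the target group.

```hcl
# Hypothetical inputs; none of these names come from the original project.
variable "vpc_id" { type = string }
variable "private_subnet_ids" { type = list(string) }
variable "cluster_arn" { type = string }
variable "task_security_group_id" { type = string }
variable "image" { type = string }

# Internal (not internet-facing) Network Load Balancer.
resource "aws_lb" "internal" {
  name               = "akka-internal-nlb"
  load_balancer_type = "network"
  internal           = true
  subnets            = var.private_subnet_ids
}

# Targets are registered by task IP, which awsvpc tasks require.
resource "aws_lb_target_group" "akka" {
  name        = "akka-tcp"
  port        = 2552 # assumed Akka port
  protocol    = "TCP"
  vpc_id      = var.vpc_id
  target_type = "ip"
}

resource "aws_lb_listener" "akka" {
  load_balancer_arn = aws_lb.internal.arn
  port              = 2552
  protocol          = "TCP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.akka.arn
  }
}

# awsvpc gives every task its own network interface and private IP.
resource "aws_ecs_task_definition" "akka" {
  family                   = "akka-node"
  network_mode             = "awsvpc"
  requires_compatibilities = ["EC2"]

  container_definitions = jsonencode([{
    name         = "akka-node"
    image        = var.image
    memory       = 512
    essential    = true
    portMappings = [{ containerPort = 2552, protocol = "tcp" }]
  }])
}

resource "aws_ecs_service" "akka" {
  name            = "akka-node"
  cluster         = var.cluster_arn
  task_definition = aws_ecs_task_definition.akka.arn
  desired_count   = 2
  launch_type     = "EC2"

  # Required for awsvpc tasks: where the task network interfaces are placed.
  network_configuration {
    subnets         = var.private_subnet_ids
    security_groups = [var.task_security_group_id]
  }

  load_balancer {
    target_group_arn = aws_lb_target_group.akka.arn
    container_name   = "akka-node"
    container_port   = 2552
  }

  depends_on = [aws_lb_listener.akka]
}
```

With IP targets, the NLB does not preserve the client IP for TCP by default, so traffic between two tasks on the same worker arrives from the load balancer's private address instead of looping back with the worker's own address.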

Final Outcome:

- Fully functional internal-only ECS Cluster with NLB.

- All components successfully connected, ensuring high availability and security.

- Had the application used HTTP/HTTPS instead of the Akka protocol, an AWS ALB would have been the better choice since it supports security groups, but the NLB was required for the custom TCP protocol.

This project demonstrated the importance of understanding AWS networking intricacies, especially when using NLB with internal-only traffic in ECS. 🚀