We recently helped a client migrate their Ruby on Rails (RoR) application from Heroku to Amazon Web Services (AWS). The migration was driven by the growing app's need for more capacity and flexibility at minimal cost. It was a production app without testing servers, so there was no room for error.

Some context about Heroku

Heroku is a cloud platform provided by Salesforce. Although it is customer-centric, some of its configurations cannot be changed by customers. Developers still like Heroku because it lets them run their applications quickly without spending much time on infrastructure configuration. It is especially appealing to small startups whose primary goal is to launch and get going fast.

However, as companies grow and their needs become more complex, migrating from Heroku to AWS becomes increasingly relevant. Heroku also supports a limited set of languages and environments: if it doesn't support the runtime or components a company wants to build on, there is little choice but to migrate to AWS. AWS's flexible configuration and wide range of services make it an attractive option for building more sophisticated, robust, and cost-effective systems.

A few reasons to migrate from Heroku to AWS

Convenience

If you host other cloud services on AWS and want to keep everything in one place, switching to AWS may be more convenient.

Cost

On Heroku, applications run in containers called “dynos,” and each dyno runs only one service. Additional dynos cost $25-50 per month each, so if your workload grows to the point where you need several dynos, your bills go up accordingly. AWS charges you only for what you use, and for larger applications and infrastructure it quickly becomes the more affordable option. AWS also offers many tools and services for monitoring and controlling costs.

Location

Heroku offers four or five regions, whereas the AWS cloud spans 81 Availability Zones in 25 geographic regions around the world, with announced plans for 21 more Availability Zones and 7 more AWS Regions in Australia, India, Indonesia, Israel, Spain, Switzerland, and the United Arab Emirates (UAE).

The breaking point

Initially, our client’s app ran well on Heroku. However, as traffic increased, the project began to run into problems and limitations.

Performance

The biggest problem was poor utilization of server resources. Each dyno has a limited share of CPU and memory because Heroku runs multiple dynos on a single Amazon EC2 instance. Heroku's plans therefore tie CPU and memory together in fixed proportions, and from Heroku's side this limitation is justified. But applications differ, and each needs its own balance of CPU and memory. When a customer upgrades their plan to get more memory, they also end up paying for extra CPU power they don't use. The more you need to scale your application, the more evident this cost becomes.

Control

Heroku requires installing special software that is not easy to use. Nor is it possible to connect to dynos via SSH, for example to troubleshoot CPU or memory issues.

Reliability

Heroku also turned out to have reliability issues: its deployment API seemed to fail once or twice a week.

Cost-effectiveness

Overall, Heroku had become more expensive and labor-intensive than it used to be.

To overcome these challenges, the company had to leave Heroku and find a new hosting provider.

How the migration occurred

Instead of spending more effort and money on dynos, we decided to deploy the application to AWS Elastic Beanstalk using Docker, which makes it easy to scale.

AWS seemed like the go-to solution: its virtual server instances come in a wide variety of CPU and memory configurations, provide full root access, and offer better performance than Heroku.

Migrating a working application from Heroku seemed like a daunting task. Fortunately, Docker makes moving to AWS much easier: it packages the application with all its dependencies into a container that can run on any Linux system. Once built, a Docker image can run anywhere Docker is available. Containers carry their own Linux filesystem, which gives them much of the portability and isolation of a virtual machine. The main difference is the resource footprint: containers share the host's kernel instead of booting a full guest operating system, so they are lightweight and start much faster. When we chose Docker, we knew it would be key to speeding up the migration to AWS.

The first thing we did was compare the costs of AWS and Heroku. Heroku had cost around $800 per month. We calculated that the same capacity on AWS would cost $600-650. (It's worth mentioning that after migrating to AWS and monitoring the load, we reduced the capacity of servers and services, cutting costs to nearly $450 per month.) We also drew a diagram of the application and its interactions with all services.
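The cost figures above translate into savings with a little arithmetic. This Python sketch uses only the monthly numbers quoted in this section ($800 on Heroku, the $600-650 AWS estimate, and roughly $450 after right-sizing); the function name `savings` is our own.

```python
def savings(old: float, new: float) -> tuple[float, float]:
    """Return (monthly savings in USD, savings as a percentage of the old bill)."""
    diff = old - new
    return diff, diff / old * 100


HEROKU_MONTHLY = 800.0  # Heroku bill before the migration, USD per month

# AWS figures from this section, USD per month
scenarios = {
    "AWS estimate (low)": 600.0,
    "AWS estimate (high)": 650.0,
    "AWS after right-sizing": 450.0,
}

for name, cost in scenarios.items():
    monthly, pct = savings(HEROKU_MONTHLY, cost)
    print(f"{name}: saves ${monthly:.0f}/month ({pct:.2f}%), ${monthly * 12:.0f}/year")
```

Running it reports savings of 25.00% and 18.75% for the two ends of the original estimate, and 43.75% (about $4,200 per year) after right-sizing.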

Next, we wrote a Dockerfile, the configuration file that tells Docker how to build the image. Its syntax resembles a bash script and is easy to understand. Docker images can be based on other images and are built up in layers.
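Layering is what makes rebuilds fast. The Python sketch below is a deliberately simplified model, not Docker's actual cache implementation: each layer's cache key is a hash of its parent's key, the instruction text, and (for `COPY`) the contents of the copied files, so changing one instruction invalidates that layer and every layer above it. The Dockerfile lines and file contents are hypothetical.

```python
import hashlib


def layer_keys(instructions, files):
    """Return one cache key per instruction, each chained to its parent layer.

    instructions: Dockerfile-like instruction strings, in order.
    files: dict mapping file name -> contents, read by COPY instructions.
    """
    keys = []
    parent = ""
    for instr in instructions:
        h = hashlib.sha256(f"{parent}\0{instr}\0".encode())
        if instr.startswith("COPY "):
            # Toy rule: every argument except the last (the destination) is a
            # source file name, and "." means every file in the build context.
            sources = instr.split()[1:-1]
            names = sorted(files) if sources == ["."] else sources
            for name in names:
                h.update(f"{name}\0{files.get(name, '')}\0".encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_layers(old_keys, new_keys):
    """Indexes of layers whose key changed, i.e. layers the cache cannot reuse."""
    return [i for i, (old, new) in enumerate(zip(old_keys, new_keys)) if old != new]


# Hypothetical Rails-style Dockerfile: gems are installed before the code is copied.
dockerfile = [
    "FROM ruby:2.3.3",
    "WORKDIR /app",
    "COPY Gemfile Gemfile.lock ./",
    "RUN bundle install",
    "COPY . .",
]
files_v1 = {"Gemfile": "gems v1", "Gemfile.lock": "locked gems v1", "app.rb": "code v1"}
files_v2 = {**files_v1, "app.rb": "code v2"}  # only application code changed

base = layer_keys(dockerfile, files_v1)
print(rebuilt_layers(base, layer_keys(dockerfile, files_v2)))  # [4]

upgraded = ["FROM ruby:2.4.10"] + dockerfile[1:]  # new Ruby base image
print(rebuilt_layers(base, layer_keys(upgraded, files_v1)))  # [0, 1, 2, 3, 4]
```

This is why Rails Dockerfiles commonly copy `Gemfile` and `Gemfile.lock` before the rest of the code: editing application code leaves the `bundle install` layer cached, while switching the Ruby base image rebuilds every layer from scratch.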

In our RoR application, the main task was to get all dependencies installed and to configure the complex software components in our stack. This can be a tedious trial-and-error process, but being able to test everything in a local environment was a huge advantage. Deploying a new version of the application in Docker takes little time, which significantly speeds up development. When Ruby had to be upgraded from version 2.3.3 to 2.4.10, the transition took no more than an hour, dispelling any doubts about Docker.

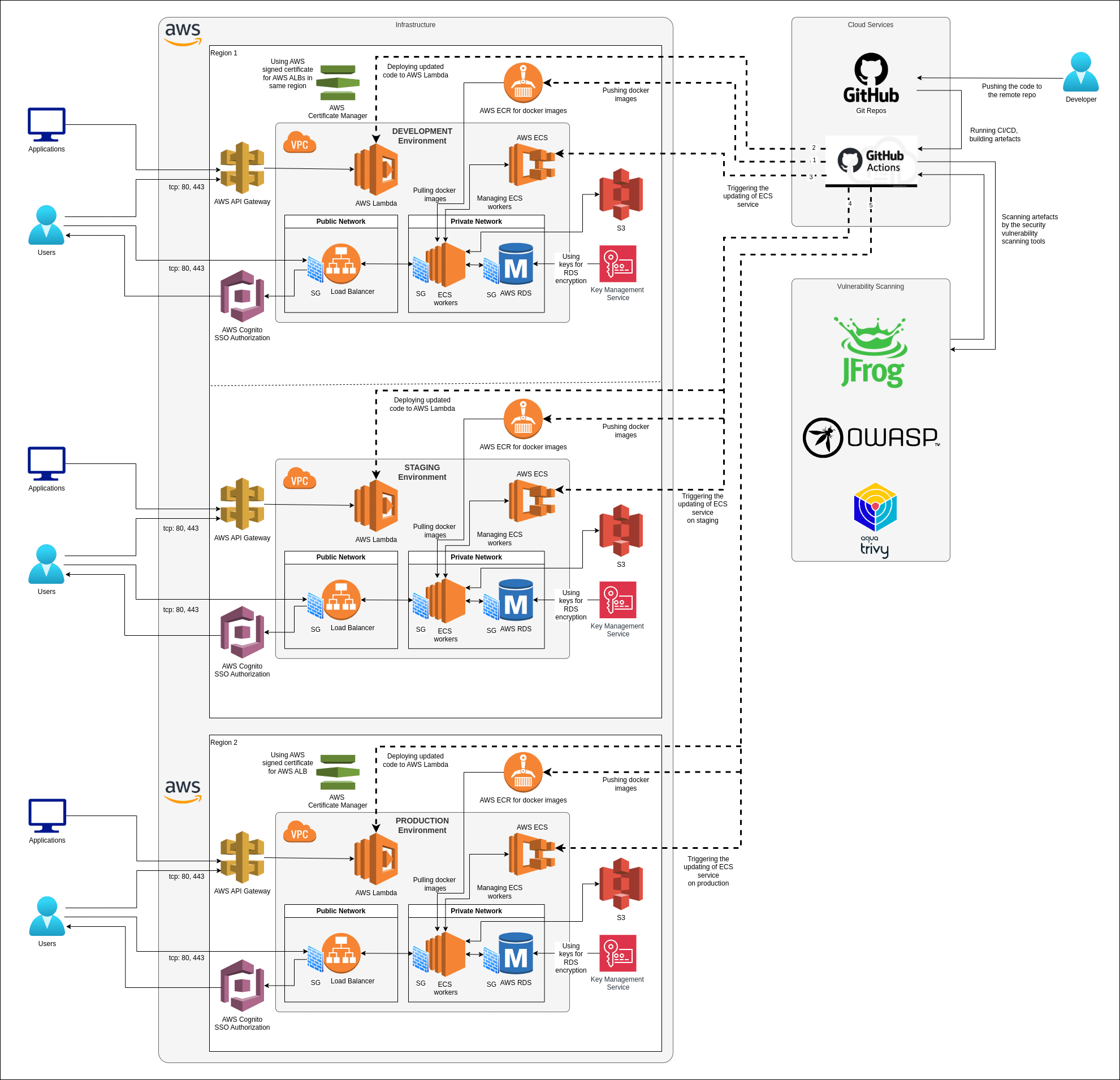

The next step was configuring the environment in AWS. To save time, we used Elastic Beanstalk, which comes with several built-in Docker platform versions. Dockerization was simple and mainly consisted of building a Docker image from the code in the repository. The app's Dockerfile also served as the basis for dockerizing the background worker (Sidekiq).
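Reusing one Dockerfile for the web app and the worker usually comes down to starting the same image with a different command. The Python sketch below only builds `docker run` argument lists to show that idea; the image tag `myapp:latest`, the container names, and the environment values are hypothetical placeholders, while `bundle exec sidekiq` and `rails server` are the standard launch commands for Sidekiq and Rails.

```python
import shlex

IMAGE = "myapp:latest"  # hypothetical tag for the image built from the app's Dockerfile

# One image, two process types: only the command differs.
COMMANDS = {
    "web": ["bundle", "exec", "rails", "server", "-b", "0.0.0.0", "-p", "3000"],
    "worker": ["bundle", "exec", "sidekiq"],
}


def docker_run_args(role, env):
    """Build a `docker run` argument list for the given process role.

    env: environment variables passed with -e, so the same image can be
    configured differently per environment without rebuilding it.
    """
    if role not in COMMANDS:
        raise ValueError(f"unknown role: {role}")
    args = ["docker", "run", "--detach", "--name", f"myapp-{role}"]
    for key, value in sorted(env.items()):
        args += ["-e", f"{key}={value}"]
    if role == "web":
        args += ["-p", "3000:3000"]  # only the web process serves HTTP
    return args + [IMAGE] + COMMANDS[role]


env = {"RAILS_ENV": "production", "REDIS_URL": "redis://redis:6379/0"}  # hypothetical values
for role in COMMANDS:
    print(shlex.join(docker_run_args(role, env)))
```

Because both processes share one image, a gem or Ruby upgrade is built once and picked up by the web app and the worker alike.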

All in all, the migration from Heroku to AWS took one DevOps engineer nearly three weeks. This included learning Elastic Beanstalk; integrating and deploying the services (Elasticsearch, RDS Postgres, Memcached, Redis, S3, the load balancer, Cloudflare, and WordPress); setting up CI/CD (CodeDeploy and GitHub Actions); and testing, which took the bulk of the time.

The most valuable part is a Docker setup that works locally and will work the same way in production.

In the new Docker-based AWS environment, we have achieved greater flexibility and productivity than we had on Heroku. Servers no longer require manual scaling: capacity adjusts automatically as the memory or CPU load rises and falls. Hosting bills have also decreased.
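Elastic Beanstalk scales an environment through an Auto Scaling group whose triggers (set in the `aws:autoscaling:trigger` option namespace) compare a metric against upper and lower thresholds. The Python function below is a simplified model of that threshold logic, not AWS's implementation: it ignores breach duration and cooldowns, and the thresholds, step size, instance limits, and load readings are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # Hypothetical values for illustration, not AWS defaults.
    lower_threshold: float = 30.0  # scale in below this average utilization (%)
    upper_threshold: float = 70.0  # scale out above this average utilization (%)
    step: int = 1                  # instances added or removed per decision
    min_instances: int = 1
    max_instances: int = 4


def desired_capacity(current: int, utilization: float, policy: ScalingPolicy) -> int:
    """Return the new instance count after one evaluation of the metric.

    utilization: average CPU (or memory) utilization across instances, in percent.
    """
    if utilization > policy.upper_threshold:
        target = current + policy.step
    elif utilization < policy.lower_threshold:
        target = current - policy.step
    else:
        target = current  # inside the band: leave capacity alone
    # Never go outside the configured group size.
    return max(policy.min_instances, min(policy.max_instances, target))


policy = ScalingPolicy()
capacity = 1
for load in [45, 85, 90, 95, 95, 50, 20, 10]:  # hypothetical load readings
    capacity = desired_capacity(capacity, load, policy)
    print(f"load {load:>3}% -> {capacity} instance(s)")
```

With these readings the group grows from 1 to the cap of 4 instances during the spike and shrinks back to 2 as load falls. Real triggers also wait for the metric to stay past a threshold for a breach duration before acting, which keeps short spikes from causing constant scaling.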

The new degree of control, such as logging in via SSH, has proven very useful: problems are now much easier to detect and fix.

Conclusion

Our client is happy with the decision to move to AWS. It improved server performance and gave them greater control over the infrastructure in a short time. Another major achievement is being able to develop with and set up Docker locally, knowing that it will work the same way in any environment.

Portability is another gain. Instead of being tied to a single provider, the application can easily be deployed to other cloud infrastructure should the need arise. Docker gives developers greater control over how they deploy applications.

Robust and promising, Docker was the key reason we managed to switch from Heroku to AWS quickly and with minimal effort.