SOA project’s components

The project consists of the following components:

- Applications with HTTP access: Python applications based on the Tornado framework, and Go applications.

- Python applications that read queries and execute the necessary actions.

- HashiCorp Consul (https://www.consul.io/) for automatic service discovery.

- eBay Fabio (https://github.com/fabiolb/fabio), used as the HTTP/HTTPS load balancer in front of all registered microservices. It natively reads its routing data from Consul.

All microservices are packaged as Docker containers. To reduce image size and deployment time, we ship only build artifacts in the final images. For example, a Go application needs many dependencies to compile, but the compiled binary can run in a slim Docker image (for example, Debian slim).

In some cases a Docker image containing all Go build dependencies exceeded 1 GB. Copying only the compiled binary into a slim image brought the image down to about 50 to 100 MB, which considerably shortens deployments.

Configuration

Important and secret application configuration is stored in Consul or Vault (https://www.vaultproject.io/), which serve as the main configuration storage. Consul alone can also be used, with its built-in ACLs granting each application access to its configuration. Using Vault as the storage provides flexible and powerful management of application access to the stored configuration.
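When Consul alone holds the configuration, an application reads it through Consul's HTTP KV API: `GET /v1/kv/<key>` returns a JSON array whose `Value` fields are base64-encoded, and the ACL token travels in the `X-Consul-Token` header. The sketch below is a minimal illustration, assuming a Consul agent at `http://127.0.0.1:8500` and a hypothetical key prefix `config/orders/`; it only builds the request and decodes a response, so it can be tested without a running agent.

```python
import base64
import json
from typing import Dict, Optional
from urllib.parse import quote
from urllib.request import Request


def consul_kv_request(consul_addr: str, prefix: str, acl_token: str) -> Request:
    """Build a GET request for every key under `prefix`, authenticated by an ACL token."""
    # `recurse` returns all keys below the prefix instead of a single key.
    url = f"{consul_addr.rstrip('/')}/v1/kv/{quote(prefix)}?recurse=true"
    return Request(url, headers={"X-Consul-Token": acl_token}, method="GET")


def decode_consul_kv(body: str, prefix: str = "") -> Dict[str, Optional[str]]:
    """Map each key (relative to `prefix`) to its decoded value."""
    result: Dict[str, Optional[str]] = {}
    for entry in json.loads(body):
        raw = entry.get("Value")
        # Consul base64-encodes values; "folder" keys come back with a null Value.
        value = base64.b64decode(raw).decode("utf-8") if raw is not None else None
        key = entry["Key"]
        if prefix and key.startswith(prefix):
            key = key[len(prefix):]
        result[key] = value
    return result
```

In a real service the request would be sent with `urllib.request.urlopen` and the response body passed to `decode_consul_kv`.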

Consul is fault tolerant, which is why Vault is configured to use it as its storage backend. To read secret data, an application only needs a valid Vault token; Vault decrypts the data itself, even when it was written by another Vault instance in the infrastructure.
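Reading a secret then comes down to one authenticated HTTP call. This is a minimal sketch, assuming the KV version 2 secrets engine mounted at `secret` (the dev-mode default) and a hypothetical secret path `orders/db`; KV v2 serves data at `/v1/<mount>/data/<path>`, expects the token in the `X-Vault-Token` header, and nests the key/value pairs under `data.data` in the response. A KV v1 mount would use a different path and response shape.

```python
import json
from typing import Dict
from urllib.request import Request


def vault_read_request(vault_addr: str, token: str, mount: str, path: str) -> Request:
    """Build a KV v2 read request; the token is the only credential the app needs."""
    url = f"{vault_addr.rstrip('/')}/v1/{mount}/data/{path}"
    return Request(url, headers={"X-Vault-Token": token}, method="GET")


def parse_kv2_secret(body: str) -> Dict[str, str]:
    """KV v2 wraps the secret as {"data": {"data": {...}, "metadata": {...}}}."""
    return json.loads(body)["data"]["data"]
```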

Additional, non-secret configuration, such as the routes that must be registered to reach the application, is stored in each service's global environment. This environment can hold other non-secret data as well, for example health check URLs, health check intervals, the Consul address, and the main load balancer address.
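A service can load these non-secret settings from its environment at startup. The sketch below is illustrative only: `SERVICE_TAGS`, `SERVICE_CHECK_HTTP` and `SERVICE_CHECK_INTERVAL` are variables that registrator understands, and `urlprefix-` tags are how Fabio learns routes, while `CONSUL_ADDR`, `FABIO_ADDR` and all default values are hypothetical choices for this example.

```python
import os
from dataclasses import dataclass
from typing import List, Mapping


@dataclass(frozen=True)
class ServiceSettings:
    consul_addr: str
    fabio_addr: str
    check_http: str
    check_interval: str
    routes: List[str]


def load_settings(env: Mapping[str, str] = os.environ) -> ServiceSettings:
    """Read non-secret settings from the service's environment (defaults are hypothetical)."""
    # SERVICE_TAGS is comma-separated; tags such as "urlprefix-/orders" become Fabio routes.
    tags = [t.strip() for t in env.get("SERVICE_TAGS", "").split(",") if t.strip()]
    return ServiceSettings(
        consul_addr=env.get("CONSUL_ADDR", "http://127.0.0.1:8500"),
        fabio_addr=env.get("FABIO_ADDR", "http://127.0.0.1:9999"),
        check_http=env.get("SERVICE_CHECK_HTTP", "/health"),
        check_interval=env.get("SERVICE_CHECK_INTERVAL", "10s"),
        routes=[t for t in tags if t.startswith("urlprefix-")],
    )
```

Passing the mapping explicitly, as the default argument allows, keeps the loader easy to test without touching the real process environment.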

Update and run

In production mode, updating an application means rebuilding its image and recreating the container. In development mode, all code is mounted into the Docker containers.

When an application instance is stopped, the consul registrator service detects it and tells Consul to deregister that instance. Fabio (https://github.com/fabiolb/fabio) then picks up the change and removes the instance from the route table entry for the route the application was registered under.

When a new application instance is launched, the consul registrator service (http://gliderlabs.github.io/registrator/latest/) tells Consul to register it with the required configuration, which is stored in the service's global environment.
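Registrator performs registration and deregistration automatically; the sketch below only shows what those operations amount to in Consul's agent API, `PUT /v1/agent/service/register` with a JSON body and `PUT /v1/agent/service/deregister/<id>`. The service name, instance ID, address, port and health check path are hypothetical values, and the exact payload registrator builds may contain more fields.

```python
import json
from typing import Any, Dict, List


def registration_payload(name: str, instance_id: str, address: str, port: int,
                         tags: List[str], check_path: str, interval: str) -> Dict[str, Any]:
    """Body for PUT /v1/agent/service/register on the local Consul agent."""
    return {
        "ID": instance_id,   # unique per container, so instances can be removed one by one
        "Name": name,        # instances sharing a Name form one service
        "Address": address,
        "Port": port,
        "Tags": tags,        # e.g. "urlprefix-/orders", which Fabio turns into a route
        "Check": {
            "HTTP": f"http://{address}:{port}{check_path}",
            "Interval": interval,
        },
    }


def deregistration_path(instance_id: str) -> str:
    """Endpoint called (with PUT) when the instance's container stops."""
    return f"/v1/agent/service/deregister/{instance_id}"


payload = registration_payload("orders", "orders-1", "10.0.1.5", 8080,
                               ["urlprefix-/orders"], "/health", "10s")
print(json.dumps(payload, indent=2))
```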

Development also uses Docker, but through docker-compose, which keeps all infrastructure components and the overall scheme. Developers on an operating system without native Docker support can run the project in a Vagrant environment.

Applications are configured to scale automatically when CloudWatch detects high load. All triggers are created in AWS CloudWatch and attached to the AWS EC2 and ECS auto scaling policies.
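The core idea of such a trigger is an "M out of N" evaluation: the alarm fires when enough recent datapoints breach a threshold. The sketch below is a deliberately simplified model, assuming a "greater than threshold" comparison and ignoring CloudWatch's missing-data handling and statistics; the thresholds in the usage line are hypothetical.

```python
from typing import Sequence


def alarm_state(datapoints: Sequence[float], threshold: float,
                evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Simplified M-out-of-N alarm check using a 'greater than threshold' comparison."""
    window = list(datapoints)[-evaluation_periods:]
    if len(window) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    breaching = sum(1 for value in window if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


# e.g. CPU utilization above 80% in 3 of the last 3 periods would trigger a scale-out policy
print(alarm_state([40, 85, 90, 95], threshold=80, evaluation_periods=3, datapoints_to_alarm=3))
```

Requiring several breaching periods rather than one avoids scaling on a single short spike.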

Load balancing

This scheme could also use the AWS Application Load Balancer as the main access point, but its hard limit made that impossible once the number of routes exceeded 10. Fabio has no such limit.

When several instances of one application are launched, for example two or three, Consul registers all of them and Fabio adds them to the route table under a single route. The Fabio management console shows that traffic is balanced evenly across all instances of the application.
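To make the routing behavior concrete, here is a toy route table: the longest matching path prefix selects the route, and requests rotate across that route's instances. This is an illustration, not Fabio's implementation; Fabio chooses among instances according to its `proxy.strategy` setting (random by default, or round-robin), and the prefixes and addresses below are hypothetical.

```python
import itertools
from typing import Dict, Iterator, List


class RouteTable:
    """Toy prefix router: longest matching prefix wins, instances are used round-robin."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}
        self._cycles: Dict[str, Iterator[str]] = {}

    def add(self, prefix: str, instance: str) -> None:
        self._instances.setdefault(prefix, []).append(instance)
        # Rebuild the rotation so the new instance starts receiving traffic.
        self._cycles[prefix] = itertools.cycle(self._instances[prefix])

    def remove(self, prefix: str, instance: str) -> None:
        # Mirrors deregistration: the instance leaves the rotation for its route.
        self._instances[prefix].remove(instance)
        if self._instances[prefix]:
            self._cycles[prefix] = itertools.cycle(self._instances[prefix])
        else:
            del self._instances[prefix]
            del self._cycles[prefix]

    def pick(self, path: str) -> str:
        matches = [p for p in self._instances if path.startswith(p)]
        if not matches:
            raise LookupError(f"no route for {path}")
        return next(self._cycles[max(matches, key=len)])
```

With two instances on `/orders`, four requests to `/orders/42` are split two and two, which is the even distribution the management console shows.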

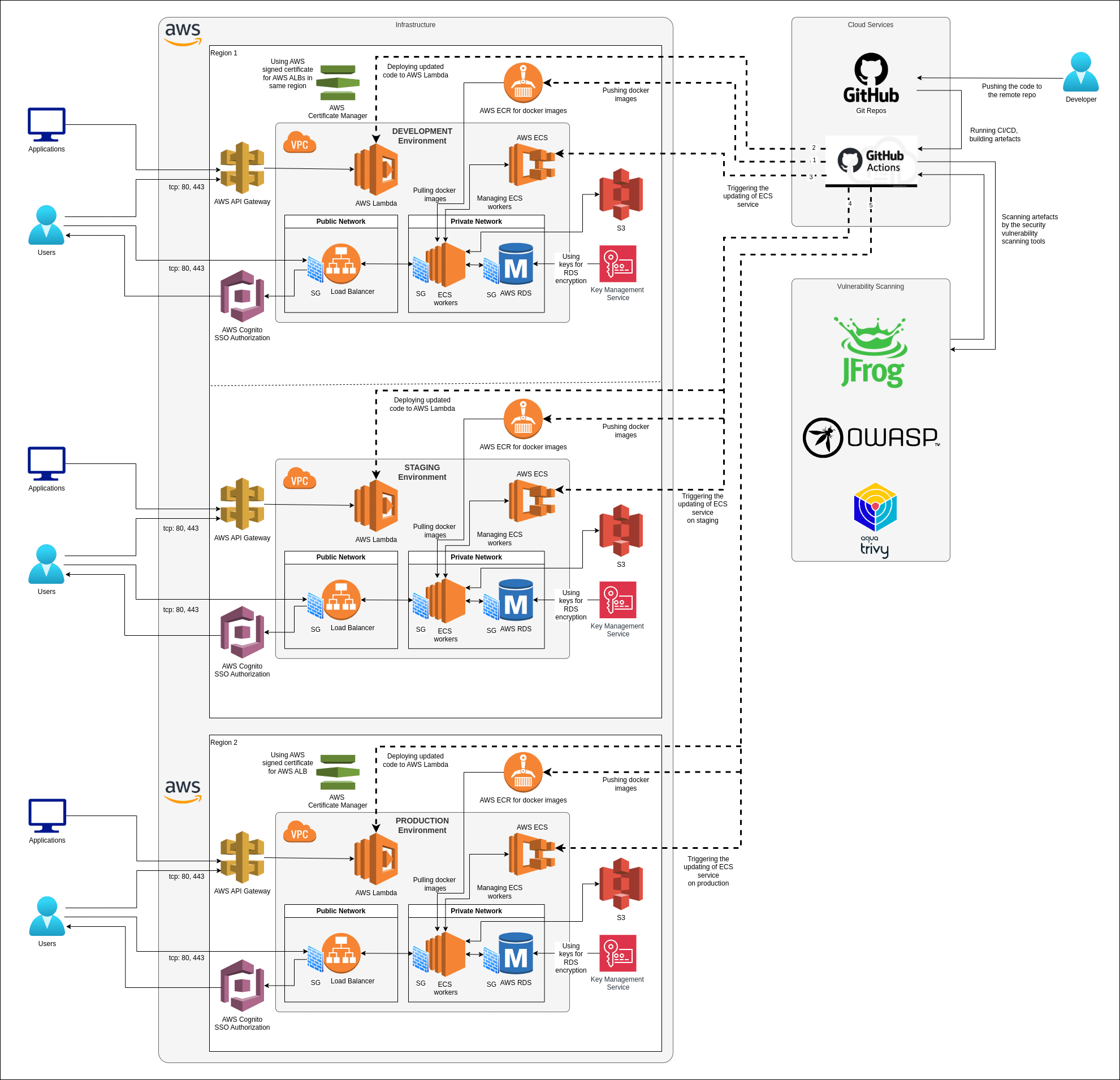

Process to create and launch the project on AWS

The project is created and launched on AWS with a Terraform (https://www.terraform.io/) scenario. Only the scenario's configuration file has to be filled in, and a specific Terraform version is used to guarantee compatibility. Once everything checks out, the client runs the terraform apply command to create all infrastructure elements, and the project is up in 30 to 40 minutes, depending on how long its dependencies (RDS instances, Docker images, etc.) take to create and initialize.
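The version pin can be enforced before terraform apply runs. A minimal pre-flight sketch, assuming the first line of `terraform version` output has the form `Terraform v0.11.14`; the pinned version in the test is a hypothetical example. Terraform's own `required_version` setting in the `terraform` block is the native way to express the same constraint inside the scenario.

```python
import re
from typing import Optional


def installed_terraform_version(version_output: str) -> Optional[str]:
    """Extract 'X.Y.Z' from the first line of `terraform version` output."""
    text = version_output.strip()
    first_line = text.splitlines()[0] if text else ""
    match = re.match(r"Terraform v(\d+\.\d+\.\d+)", first_line)
    return match.group(1) if match else None


def check_pinned_version(version_output: str, pinned: str) -> None:
    """Stop before `terraform apply` if the installed version differs from the pin."""
    installed = installed_terraform_version(version_output)
    if installed != pinned:
        raise RuntimeError(f"Terraform {pinned} required, found {installed}")
```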

The main objects created are:

- Network infrastructure (VPC, security groups, internet gateway, etc.).

- IAM objects (certificates, roles for EC2 instances and ECS services).

- AWS EC2 launch configurations for the AWS ECS instances.

- A configurable number of servers to manage the project.

- AWS RDS instances with dynamic parameters, such as a configurable number of replication instances. To change the count, update the Terraform scenario's config file and apply it again. Within 5 to 10 minutes the new replication instance is created and connected to the application by updating the configuration stored in Consul.

- AWS ECS clusters, ECR registries (for storing Docker images), ECS task definitions, and the cluster services that run those task definitions.

- Autoscaling based on AWS ECS and EC2 metrics. All autoscaling triggers are attached to the AWS ECS cluster services and to EC2 auto scaling.

- CloudWatch triggers that drive the autoscaling actions.
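On the application side, a new replication instance only has to appear in the configuration stored in Consul. The sketch below assumes a hypothetical JSON layout for that value (a `primary` endpoint plus a `replicas` list) and shows one common way to use it: writes go to the primary, reads rotate across replicas, and reads fall back to the primary when no replicas exist. This is a hedged illustration, not the project's actual connection logic.

```python
import itertools
import json
from typing import Iterator, List


class DatabaseEndpoints:
    """Send writes to the primary and spread reads across the configured replicas."""

    def __init__(self, config_json: str) -> None:
        # Hypothetical value stored in Consul, e.g.
        # {"primary": "db-primary:5432", "replicas": ["db-r1:5432", "db-r2:5432"]}
        config = json.loads(config_json)
        self.primary: str = config["primary"]
        self.replicas: List[str] = list(config.get("replicas", []))
        # Without replicas, reads fall back to the primary.
        self._reads: Iterator[str] = itertools.cycle(self.replicas or [self.primary])

    def for_write(self) -> str:
        return self.primary

    def for_read(self) -> str:
        return next(self._reads)
```

When the Consul value changes after terraform apply adds a replica, the application would build a fresh `DatabaseEndpoints` from the new value.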

Applications are built and updated by a Jenkins CI server, which can itself be integrated into the infrastructure as a separate microservice.

Jobs can be initialized with jenkins-job-generator, which creates jobs from templates. With this approach the client need not worry about losing data on the Jenkins server: it can be reconfigured within 5 to 10 minutes, and deployments can continue without further delay.
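The benefit of template-driven jobs is that every service's job is regenerated from one source instead of living only on the Jenkins server. The sketch below illustrates the idea with Python's `string.Template`; the template text, field names, repository base and registry address are all hypothetical and do not reflect the format jenkins-job-generator actually uses.

```python
from string import Template
from typing import Dict, List

# Hypothetical job template; "$$" escapes a literal "$" for Jenkins' own variables.
JOB_TEMPLATE = Template(
    "name: build-$service\n"
    "repo: $repo_base/$service.git\n"
    "steps:\n"
    "  - docker build -t $registry/$service:$$BUILD_NUMBER .\n"
    "  - docker push $registry/$service:$$BUILD_NUMBER\n"
)


def render_jobs(services: List[str], repo_base: str, registry: str) -> Dict[str, str]:
    """Render one job definition per service from the shared template."""
    return {
        service: JOB_TEMPLATE.substitute(service=service, repo_base=repo_base, registry=registry)
        for service in services
    }
```

Because the jobs are derived data, rebuilding a lost Jenkins server reduces to rendering and uploading them again.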

The production environment runs on the EC2 instances of the AWS ECS cluster, and these instances are provisioned with the additional Consul and Fabio services.

Monitoring

An AWS API library is used to create and update custom AWS CloudWatch metrics.
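With boto3, for example, a custom metric is published through the CloudWatch client's `put_metric_data(Namespace=..., MetricData=[...])` call. The sketch below accepts any client object with that method, so it runs without AWS credentials; the namespace, the `QueueDepth` metric and the `Service` dimension are hypothetical examples, not metrics the project is known to publish.

```python
from typing import Any, Dict, List


def build_metric_data(service: str, queue_depth: float) -> List[Dict[str, Any]]:
    """MetricData entries in the shape CloudWatch's PutMetricData expects."""
    return [{
        "MetricName": "QueueDepth",  # hypothetical metric name
        "Dimensions": [{"Name": "Service", "Value": service}],
        "Value": queue_depth,
        "Unit": "Count",
    }]


def publish(cloudwatch_client: Any, namespace: str, service: str, queue_depth: float) -> None:
    """Send one datapoint; in production the client would be boto3.client("cloudwatch")."""
    cloudwatch_client.put_metric_data(
        Namespace=namespace,
        MetricData=build_metric_data(service, queue_depth),
    )
```

A CloudWatch alarm on such a custom metric can then feed the same autoscaling policies as the built-in EC2 and ECS metrics.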

The project's instances are monitored by a Zabbix server, whose flexible configuration allows custom metrics to be collected. The project uses Zabbix's auto-discovery feature to register and watch instances and Docker containers.

Zabbix has no native support for monitoring the Docker engine and Docker containers. To add it, our company developed custom metrics and included them in each Zabbix agent's configuration.

With these custom metrics in the agent configuration, the Zabbix server can watch the Docker engine on every instance and every Docker container created by the AWS ECS agents.
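Container discovery in Zabbix typically relies on low-level discovery (LLD): an agent item, defined through a `UserParameter` line in the agent configuration, returns JSON whose objects set macros that item prototypes use. A minimal sketch of such a discovery script, assuming container names are taken from `docker ps --format '{{.Names}}'`; the `{#CONTAINER}` macro name is hypothetical, and this is not the company's actual implementation.

```python
import json


def containers_lld(names_output: str) -> str:
    """Convert `docker ps --format '{{.Names}}'` output into Zabbix LLD JSON."""
    names = [line.strip() for line in names_output.splitlines() if line.strip()]
    # Each object defines the {#CONTAINER} macro for the discovery rule's item prototypes.
    return json.dumps({"data": [{"{#CONTAINER}": name} for name in names]})


print(containers_lld("ecs-orders-1\necs-agent\n"))
```

Wrapping the list in a `"data"` key is accepted by all Zabbix versions that support LLD.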